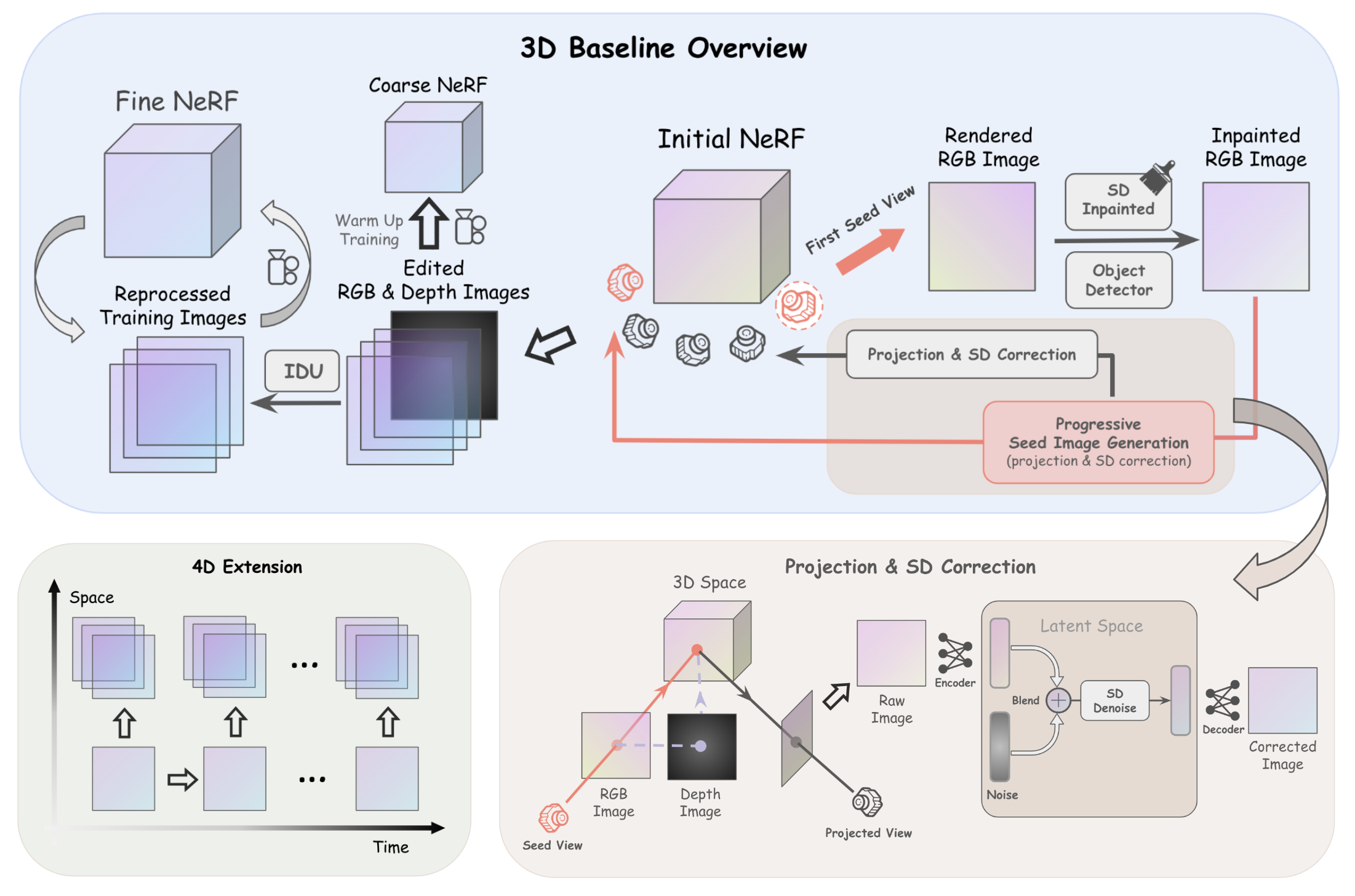

Current Neural Radiance Fields (NeRF) can generate photorealistic novel views. To edit scenes represented by NeRF, this paper proposes Inpaint4DNeRF, which capitalizes on state-of-the-art stable diffusion models (e.g., ControlNet) to directly generate the underlying completed background content, whether the scene is static or dynamic. The key advantages of this generative approach to NeRF inpainting are twofold. First, after rough mask propagation, we can complete or fill in previously occluded content by individually generating a small subset of completed images with plausible content, called seed images, from which simple 3D geometry proxies can be derived. Second, the remaining problem reduces to 3D multiview consistency among all completed images, which is now guided by the seed images and their 3D proxies. Without other bells and whistles, our generative Inpaint4DNeRF baseline framework is general and can be readily extended to 4D dynamic NeRFs, where temporal consistency is handled in much the same way as multiview consistency.

Our generative NeRF inpainting starts from the inpainted image of one training view. The other seed images and training images are obtained by using stable diffusion to hallucinate the corrupted details of the raw image produced by unprojection and reprojection. These images are then used to finetune the NeRF: warmup training first brings the geometry and coarse appearance to convergence, and iterative training image updates then bring the fine details to convergence. For the 4D extension, we first obtain a temporally consistent inpainted seed video based on the first seed image. Then, for each frame, we infer inpainted images on other views by projection and correction, as in our 3D baseline.
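The unproject-project step described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. It assumes a pinhole camera with intrinsics `K` shared by both views, a per-pixel z-depth map for the seed view, and nearest-pixel splatting with a simple far-to-near ordering. The function name `unproject_project` and all parameter names are hypothetical. Pixels left unfilled or corrupted by the warp are the regions a diffusion model would then need to correct.

```python
import numpy as np

def unproject_project(seed_rgb, seed_depth, K, T_seed_to_target):
    """Forward-warp a seed image into a target view (illustrative sketch).

    seed_rgb:         (H, W, 3) colors of the inpainted seed view
    seed_depth:       (H, W) depth along the seed camera's z-axis
    K:                (3, 3) pinhole intrinsics, assumed shared by both views
    T_seed_to_target: (4, 4) rigid transform, seed camera -> target camera
    Returns the warped (H, W, 3) image and an (H, W) bool mask of filled pixels.
    """
    H, W = seed_depth.shape
    v, u = np.mgrid[0:H, 0:W]
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).astype(np.float64)

    # Unproject: pixel -> 3D point in the seed camera frame.
    pts = (pix @ np.linalg.inv(K).T) * seed_depth.reshape(-1, 1)

    # Move the points into the target camera frame.
    R, t = T_seed_to_target[:3, :3], T_seed_to_target[:3, 3]
    pts_t = pts @ R.T + t

    # Keep only points in front of the target camera, then project.
    front = pts_t[:, 2] > 1e-6
    pts_t = pts_t[front]
    colors = seed_rgb.reshape(-1, 3)[front]
    proj = pts_t @ K.T
    uu = np.round(proj[:, 0] / proj[:, 2]).astype(int)
    vv = np.round(proj[:, 1] / proj[:, 2]).astype(int)

    # Discard points that land outside the target image.
    inside = (uu >= 0) & (uu < W) & (vv >= 0) & (vv < H)
    uu, vv, zz, colors = uu[inside], vv[inside], pts_t[inside, 2], colors[inside]

    # Write far points first so nearer points overwrite them.
    order = np.argsort(-zz)
    warped = np.zeros_like(seed_rgb)
    filled = np.zeros((H, W), dtype=bool)
    warped[vv[order], uu[order]] = colors[order]
    filled[vv, uu] = True
    return warped, filled
```

With an identity transform the warp reproduces the seed image. With a small sideways translation the image shifts and a strip of target pixels is left unfilled, which is the kind of hole the correction step has to hallucinate.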

@misc{inpaint4dnerf,
  title={Inpaint4DNeRF: Promptable Spatio-Temporal NeRF Inpainting with Generative Diffusion Models},
  author={Han Jiang and Haosen Sun and Ruoxuan Li and Chi-Keung Tang and Yu-Wing Tai},
  year={2023},
  eprint={2401.00208},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}